Need GPUs? Ship without a waitlist.

Get started

High-Performance Computing, often referred to as HPC, plays a crucial role in modern fields like artificial intelligence (AI) and large-scale data analysis. These advanced systems process vast datasets and solve complex problems multiple times faster than regular computers. They enable researchers to advance climate modeling, drug discovery, autonomous vehicle training, and numerous other applications. This makes HPC a vital tool for organizations developing advanced technologies in real-time scenarios.

This comprehensive guide explains what HPC is, how it works, where it is used, different deployment methods, its benefits, challenges, and future trends.

High-performance computing refers to systems comprising hundreds or thousands of interconnected servers that work together as a unified platform. These powerful systems, known as clusters or supercomputers, process extensive datasets and perform complex calculations at extraordinary speeds. Unlike a standard computer, which executes tasks sequentially, an HPC system divides problems into smaller parts and solves them simultaneously across many processors.

Organizations are increasingly deploying these systems across many hybrid cloud environments to strike a balance between performance and flexibility. HPC delivers three critical qualities: speed, scale, and precision. It helps organizations run complex simulations, improve algorithms, train powerful AI models, and handle massive amounts of data in a fraction of the time.

In simple terms, HPC turns tasks that would normally be too heavy for regular computers into fast, efficient, and highly manageable processes.

Modern research, AI development, and industrial applications rely on computing systems that can handle massive datasets, complex models, and real-time processing. HPC makes this possible by offering capabilities that traditional systems cannot match. The key advantages include:

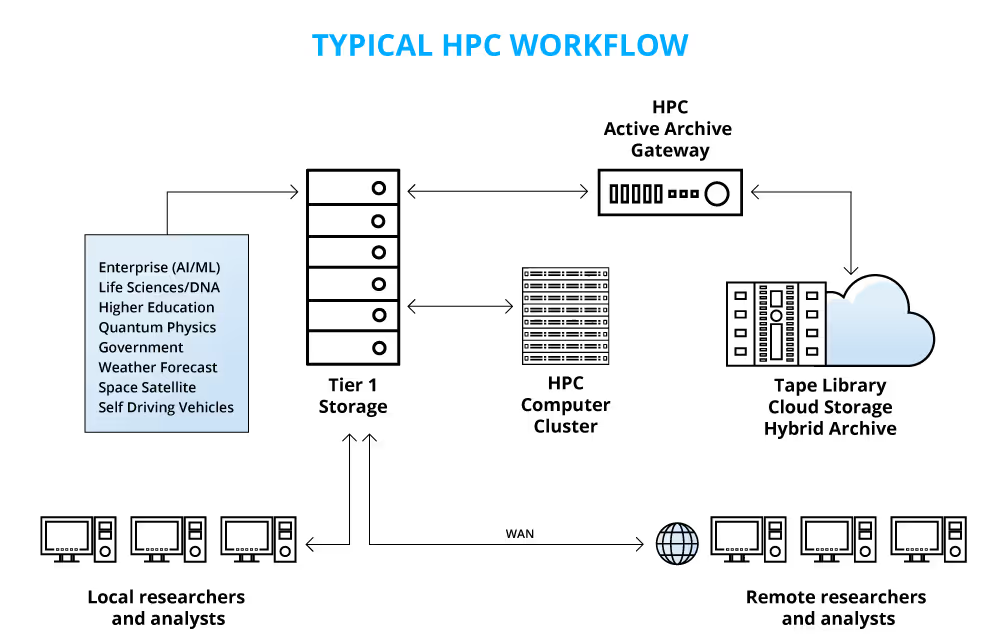

HPC systems are built quite differently from regular IT infrastructure. A typical server typically operates independently, but an HPC cluster combines multiple servers to function as a single, powerful system. Below are the four main components that make up the architecture of an HPC system:

Compute nodes are the individual servers that make up an HPC cluster. Each node has its own CPUs, GPUs, memory, and local storage. There are mainly two kinds of nodes:

Many modern HPC systems combine CPU nodes (for logic and control) with GPU nodes. For instance, in a large-scale climate model, the GPU-accelerated stencil computations allow faster resolution of the atmospheric physics phenomena.

The overall speed of an HPC cluster largely depends on its network infrastructure. Compute nodes must exchange data at extremely high speeds to operate as a unified system.

Common technologies used to connect these nodes are:

These connections reduce delays, keep all the nodes in sync, and allow parallel computations to run efficiently.

Parallelism makes HPC systems fast by breaking large problems into smaller tasks and running them on many computers at exactly the same time.

Common parallel computing models include:

HPC systems produce huge amounts of data, including simulation results, model checkpoints, logs, and raw scientific data. Storage must handle high-speed transfers and thousands of simultaneous accesses efficiently.

Managing this requires:

HPC is widely used in industries that need fast data processing, simulations, or advanced computations. Below are some of the key applications.

Scientists use HPC to simulate complex natural systems, such as climate patterns, weather forecasts, earthquakes, and astrophysical phenomena. These applications demand significant computational power and high accuracy. For example, coupling weather prediction with hydrological models on a UK-based HPC cluster allowed more accurate extreme weather impact studies.

HPC lets engineers and manufacturers perform advanced physics-based simulations to test the designs before making prototypes. Automakers, for example, use HPC-powered simulations for crash testing, airflow analysis, and complex materials design, reducing development time and improving safety standards. Parallel computing helps them run multiple design scenarios simultaneously, making product development more efficient and cost-effective.

Training AI models and running deep learning experiments need massive computing power. HPC provides the GPU resources and parallel processing required to handle large datasets and complex models efficiently. For instance, large-scale AI models (e.g., GPT-4) run on HPC clusters with thousands of GPUs (HPCClusterScape), supporting scalable training and rapid inference capabilities.

In medicine, HPC accelerates research and diagnostics and is used for protein folding, medical imaging, and drug discovery. This enables researchers to perform molecular dynamics simulations and analyze vast chemical libraries to identify potential drug candidates. During the COVID-19 pandemic, international supercomputing alliances supported protein structure simulations and accelerated public health research.

The energy sector uses HPC to perform massive simulations for oil and gas exploration, reservoir modeling, and even engine combustion studies. HPC allows researchers to analyze large datasets from sensors and geological surveys, speeding up discoveries and improving energy efficiency. These capabilities support innovations like safer fuel injection systems and more effective renewable energy integration solutions.

Media studios depend on HPC clusters to render complex animations, visual effects, and real-time graphics for films and interactive media. Studies show that GPU-based HPC systems cut render times dramatically and allow complex, high-resolution visualizations and effects to be produced that were previously impossible or too slow. Modern HPC makes it feasible to process huge amounts of graphics data for immersive interactive performances and faster content delivery pipelines.

Organizations choose an HPC model based on their cost, scale, regulatory requirements, and specific business needs. Some of the most common options are discussed below:

This traditional setup runs HPC systems in an organization’s own data center. It offers strong control but requires heavy management overhead.

Pros

Cons

Cloud-based HPC lets organizations rent computing power as needed. It is flexible and quick to set up without any physical hardware.

Pros

Cons

A hybrid model combines both on-premises and cloud-based systems, combining control with flexibility.

Pros

Cons

Organizations adopt HPC to work more efficiently, handle larger datasets, and achieve better results. Some of the primary benefits include:

Despite its advantages, HPC comes with some significant difficulties, such as:

HPC is changing fast as new technologies like AI, cloud, and edge computing continue to grow. Here are some of the key trends shaping its future:

Exascale systems can handle one quintillion floating-point calculations every second, which is 1,000 times faster than petascale systems. These massive supercomputers make it possible to run highly accurate scientific simulations and solve complex real-world problems.

HPC and AI now work hand in hand. AI models rely on powerful HPC systems for training, while HPC tasks use AI to improve performance and automate various processes.

GPUs were once mostly used for graphics work, but their parallel computing capabilities made them suitable for deep learning and large-scale scientific computing. Modern HPC clusters now run thousands of GPUs, allowing them to handle extensive AI and data workloads far faster than CPUs.

Energy use in HPC is being reduced through improved cooling and more efficient task management practices. Data centers are also starting to use renewable energy to become more eco-friendly. Similarly, cloud providers are adopting chilled water and external air for cooling. For instance, Modern HPC facilities like Core Scientific’s AI data centers use liquid cooling and renewable energy sources to reduce carbon footprints while supporting large foundation models.

HPC systems are being set up near the devices and sensors that generate data. This helps handle real-time tasks like robotics, self-driving cars, and live data analysis more efficiently. For instance, edge HPC nodes are deployed in autonomous robotics (e.g., Boston Dynamics Spot robot uses onboard GPUs for local mapping and obstacle avoidance) and self-driving vehicles, where NVIDIA Jetson platforms process sensor data for navigation in real time.

Modern HPC setups increasingly use cloud technologies such as containers, Kubernetes, and scalable GPU clusters to make computing resources more flexible and accessible.

High-performance computing is essential for modern scientific research, AI development, and industrial innovation. However, managing HPC workloads across different environments creates operational complexity that can slow down your progress.

emma simplifies your HPC operations by providing unified multi-cloud management. It enables organizations to control HPC clusters both on-premises and in public clouds, such as AWS, Azure, and Google Cloud, through unified cloud management, allowing them to use resources efficiently while ensuring compliance with security policies.

With emma, you can:

emma makes managing GPU resources for AI and scientific computing easier, boosting performance and reducing the complexity of HPC infrastructure operations.

Do not let multi-cloud complexity limit your HPC capabilities. Request a demo to see how emma can help you control, optimize, and scale your hybrid HPC infrastructure efficiently.