Need GPUs? Ship without a waitlist.

Get started

Strategic Insights for Modern IT Architecture

Enterprise computing has advanced beyond simply choosing between the cloud's strength and the edge's distributed speed to build a unified architecture. The cloud centralizes data in large data centers, while the edge brings processing closer to sensors and devices. These architectural differences shape where each approach excels and where it faces limitations.

This distinction is even more critical as AI adoption rises, data regulations become stricter, and system complexity increases. The key question isn't whether to use both, but how to decide where to run workloads and manage the system effectively.

This article will break down those strategic considerations of cloud and edge. And also explain how modern tools and principles apply across the continuum.

Cloud computing delivers on-demand IT resources (compute, storage, networking, databases, and services) accessible from multiple remote servers. These resources are housed in geographically distributed data centers operated by hyperscalers (large cloud service providers) such as AWS, Azure, and Google Cloud.

Simply put, cloud computing hosts data and applications on virtual “Cloud” servers via the Internet and enables users to access them from anywhere.

To maximize the strategic value of the cloud, architects must consider several advanced factors that go beyond its foundational infrastructure.

Hyperscale cloud providers use multiple regions and Availability Zones to make their infrastructure reliable. This setup enables them to run highly available, disaster-resistant applications worldwide. They also use global traffic management and Content Delivery Networks (CDNs) to deliver content quickly and with low delay to users everywhere.

Using cloud services shifts the responsibility for hardware maintenance, power, cooling, and security to cloud providers. It allows company teams to focus more on developing applications, analyzing large data, and building business solutions.

Cloud excels at aggregating large datasets. Services like AWS Kinesis, Kafka-as-a-service, and Azure Event Hubs collect streaming data into cloud data lakes (S3, Blob Storage) for large-scale analytics or ML training.

This “data gravity” makes the cloud the center for data-heavy workloads. However, feeding petabytes of edge data (video streams) to the cloud can overload networks and lead to high costs.

While cloud services can save costs, poor management can also lead to cloud sprawl (uncontrolled usage of cloud resources). To avoid this, organizations need to implement FinOps practices by using right-sizing instances, using reserved/spot instances, adopting serverless models, and tagging resources for cost tracking.

Also, use an intelligent platform like emma, which can automatically optimize resource use across different providers and provide cost visibility and rightsizing recommendations.

Cloud providers operate on a shared responsibility model. They secure the underlying infrastructure (data centers, hypervisors), while customers secure their applications and data (firewalls, IAM, encryption).

Beyond its technical architecture, the strategic value of cloud computing is rooted in several key business drivers:

Innovation velocity: A vast catalog of managed services (AI/ML platforms, IoT hubs, analytics, blockchain, quantum previews) enables teams to experiment and deploy new features rapidly without building everything from scratch. And it lets businesses innovate faster and get their products to market more quickly.

Global reach: Deploying applications in new regions or countries is simple by spinning up a VM or container in the nearest cloud region. This “cloud on tap” means global market expansion without local data center investments.

Scalability for unpredictable workloads: The cloud handles bursty traffic, seasonal demand, or rapid business growth, where provisioning on-premises infrastructure would be financially prohibitive and logistically impossible.

These core strengths make the cloud the ideal platform for workloads that benefit from massive scale and centralized data processing:

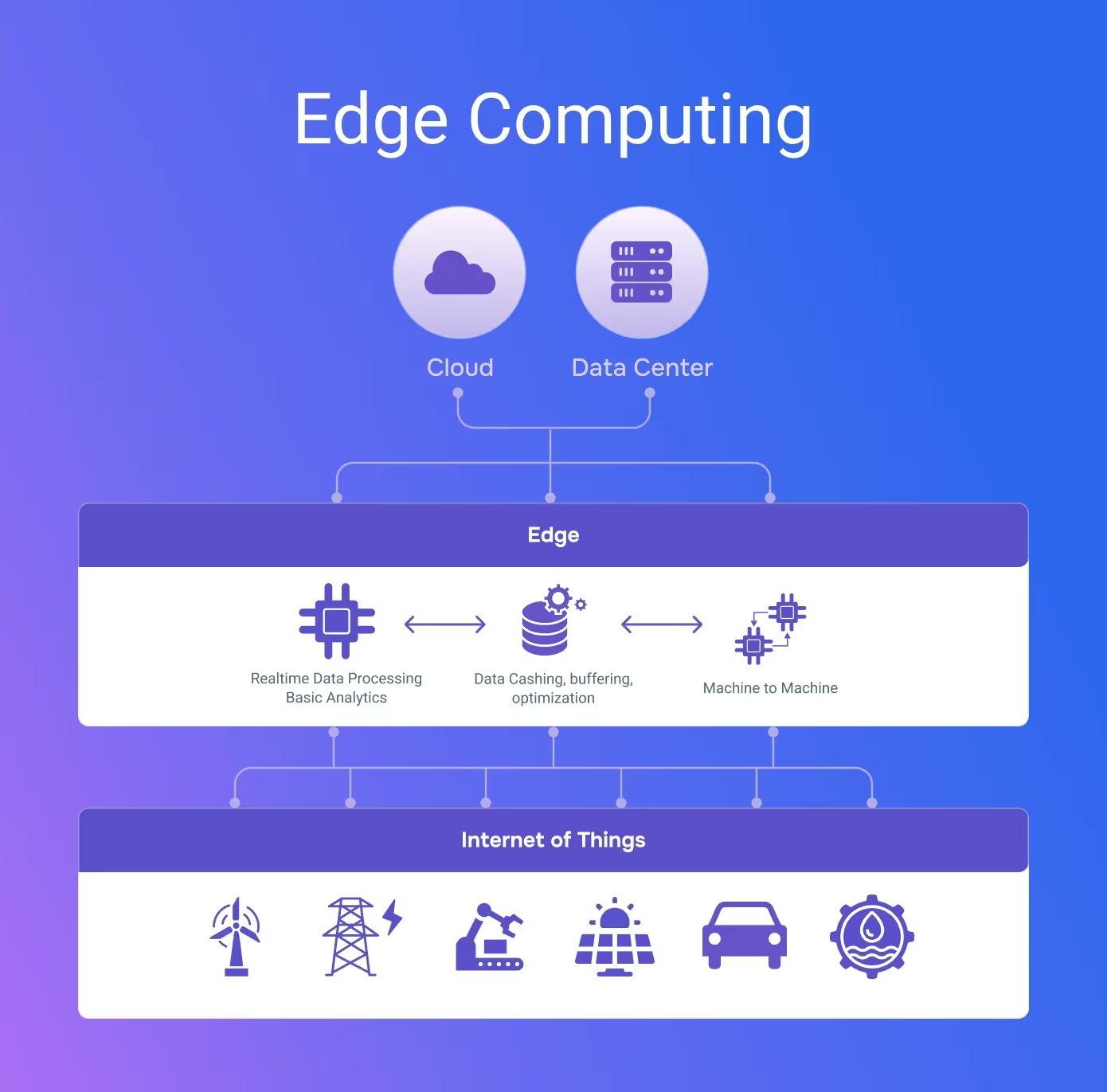

Edge computing processes data locally at the source instead of sending it to distant data centers. This reduces latency and bandwidth requirements when transferring large data volumes to a processing center, which is critical for time-sensitive applications.

Beyond the basic definition, several advanced factors drive the adoption of edge computing, each addressing a specific limitation of a purely centralized cloud model.

Many edge applications demand real-time or near-real-time response (sub-10ms). Autonomous vehicles, robotics, industrial automation, AR/VR, and high-frequency trading systems cannot tolerate the round-trip delay to a distant cloud. Deploying inference or control logic at the edge brings decision-making within milliseconds.

Billions of IoT devices generate torrents of data. Transferring all that raw data to the cloud is expensive and often unnecessary. Edge devices can perform local filtering or compression before sending data, which reduces bandwidth and cloud storage costs.

Edge often bridges traditional IT and Operational Technology (factory PLCs, SCADA). This convergence demands expertise in ruggedized hardware, industrial protocols, and deterministic control. Edge nodes may reside in harsh environments (extreme temperatures, dust), requiring resilient hardware and specialized security (secure boot, tamper detection).

Distributing compute increases attack surface, requiring strong device identity, secure updates, and zero-trust networking for thousands of edge devices. Best practices include per-device certificates, on-device encryption, and automated patch management. Edge can also boost security by keeping sensitive data local, reducing exposure.

Many regulations require data to remain within a geographic boundary or under specific controls. Companies ensure compliance with laws like GDPR or industry-specific mandates by processing and storing data on local edge nodes (or local cloud regions).

Edge computing thus helps meet data residency requirements (keeping sensitive data processing and storage local) when the cloud would otherwise require cross-border data flows. To enforce these rules at scale, platforms like emma automate sovereignty through provisioning workloads only in compliant regions or on local partner clouds. It helps ensure that data never leaves approved jurisdictions.

Edge nodes must gracefully handle intermittent or no connectivity. In remote or mobile scenarios, devices need to run autonomously, buffering data, queuing transmissions, or taking action offline. Reliable local operation is essential for environments like remote oil fields, maritime vessels, or rural healthcare clinics.

These architectural advantages translate directly into powerful strategic drivers that compel businesses to adopt edge computing.

Edge computing is essential for time-sensitive, data-intensive, or location-based use cases that operate in areas with limited connectivity. Examples include:

Edge computing is a subsection of cloud computing. While cloud computing involves hosting applications in a core data center, edge computing involves hosting them closer to end users, either in smaller edge data centers or on customer premises.

The following table highlights key differences between cloud and edge computing:

Rather than choosing one over the other, forward-looking architectures treat cloud and edge as layers of a unified “data fabric.” Edge nodes handle real-time tasks and pre-process data, and the cloud provides centralized analytics, long-term storage, and orchestration.

Consider an intelligent manufacturing scenario:

In this hybrid model, the edge provides immediacy and autonomy, while the cloud offers strategic intelligence and governance. Edge devices prevent failures and optimize operations on the spot, and the cloud turns those local insights into company-wide improvements.

Managing thousands of edge nodes with central cloud resources adds complexity in deployment, monitoring, security, and management. The key to solving this is not creating a new paradigm but extending proven cloud-native principles to the edge.

Unified management and orchestration

Instead of juggling disparate tools, the goal is a "single pane of glass." A holistic multi-cloud management platform like emma provides this unified control plane. It allows organizations to manage containerized workloads consistently across their entire infrastructure using lightweight Kubernetes distributions.

Deploying software reliably to a distributed fleet requires robust automation. Modern approaches use GitOps, in which the desired state of all edge devices is defined in a central Git repository.

CI/CD pipelines then automatically handle canary deployments and rollbacks, ensuring updates are safe and consistent without manual intervention.

Ensuring consistent data across cloud and edge is complex. Edge nodes cache data offline, then sync with cloud databases once online. Conflict resolution and eventual consistency models are necessary. Stream processing frameworks like Kafka can extend to the edge with local brokers that replicate to the cloud cluster.

Maintaining uniform security and compliance policies across a distributed space is critical. While cloud identity systems can be extended outward, each edge device also requires a hardware root of trust and on-device encryption.

This is where a unified management platform like emma is key to enforcing governance by ensuring that workloads tagged with specific compliance rules are deployed only to approved geographic locations.

Designing for the future means architecting a hybrid system that intelligently distributes workloads across the cloud-to-edge continuum. This strategic decision hinges on a few key principles:

Ready to unify your cloud and edge strategy? Request a demo to see how emma provides a single control plane to manage your entire distributed infrastructure.