To navigate today's tech landscape, shaped by AI and cybersecurity, it's essential to understand the interplay between Docker and Kubernetes and their emerging alternatives.

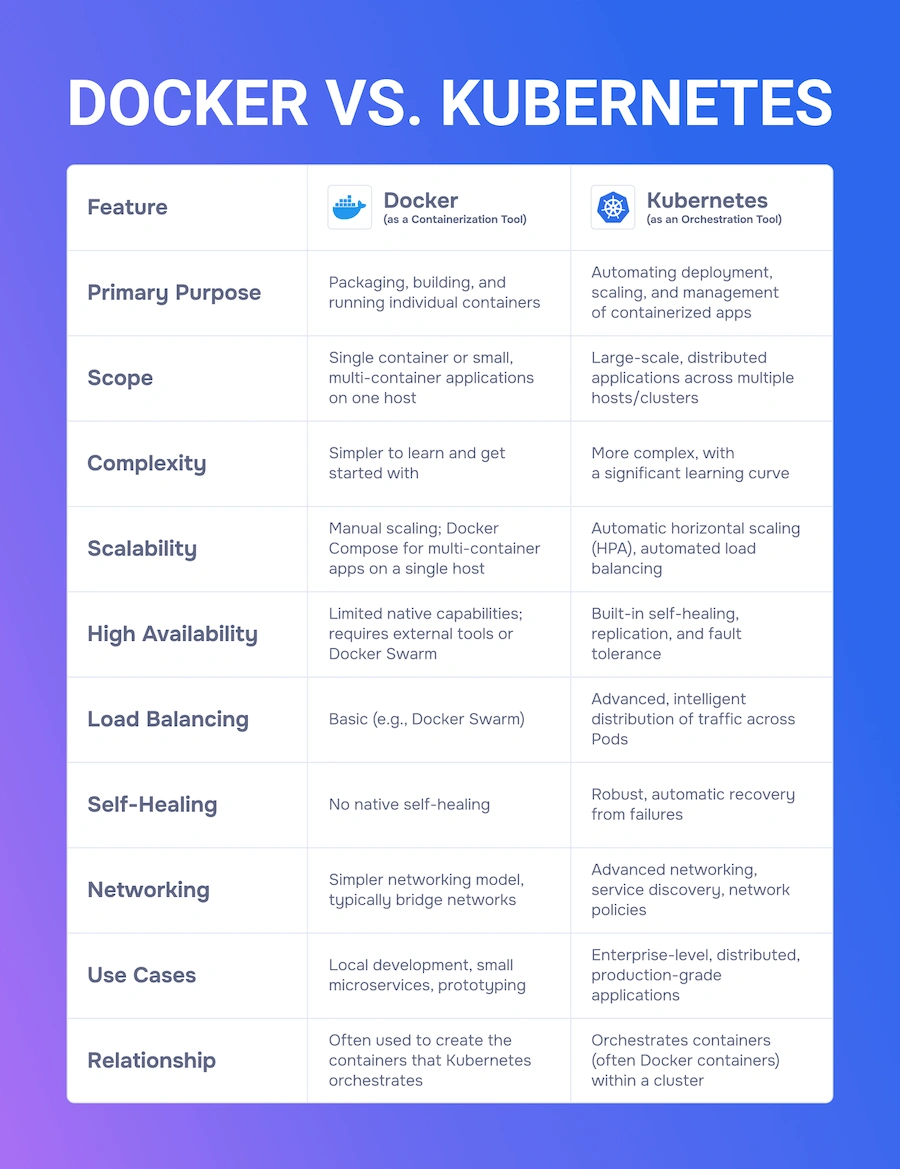

In cloud-native computing, two names have long dominated the conversation: Docker and Kubernetes. Docker lets you build and run containers, while Kubernetes helps you manage them at scale. So do you need one, the other, or both? Speaking from the trenches, it’s less gladiatorial combat and more meticulously orchestrated ballet. The two tools are complementary, each playing a distinct, critical role in modern digital infrastructure.

Today, in mid-2025, as we navigate a landscape redefined by AI integration, heightened cybersecurity threats, and the relentless pursuit of scalability, understanding the technical interplay between Docker and Kubernetes, along with their roles and emerging alternatives, is strategically important. So let's peel back the layers and look at the tech that, to this day, is driving innovation.

Docker is the pioneer of containerization. Imagine an ultra-lightweight, self-contained box that holds everything your application needs to run: your code, all its dependencies (runtimes, libraries, system tools), and configuration files. This "box" is a container, and Docker provides the platform to build, ship, and run these incredibly portable units.

Docker Engine is the heart of Docker. It comprises the Docker daemon (dockerd, which runs on the host machine), a REST API for interacting with the daemon, and the CLI (command-line interface) client. When you type docker run, the CLI sends the request through the API to the daemon, which creates and starts the container.

Docker images are the blueprints. An image is a read-only template built from a Dockerfile. Think of a Dockerfile as a recipe:

```dockerfile
# Base image
FROM node:20-alpine
# Set working directory
WORKDIR /app
# Copy package files
COPY package*.json ./
# Install dependencies
RUN npm install
# Copy application code
COPY . .
# Port exposure
EXPOSE 3000
# Command to run
CMD ["npm", "start"]
```

Instructions that change the filesystem, such as RUN and COPY, each add a read-only layer to the image, while the others only record metadata. This layering is crucial for efficiency: if you change only your application code (the top layer), Docker rebuilds and redistributes just that small layer and reuses the cached layers beneath it, saving bandwidth and build time.
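The layer reuse described above can be illustrated with a small simulation. This is only a sketch of the idea, not Docker's actual cache implementation: the `layer_keys` and `reusable_layers` helpers and the `pkg-v1`/`src-v1` content tags are hypothetical stand-ins for real file checksums.

```python
import hashlib


def layer_keys(instructions, content_tags):
    """Return one cache key per instruction, chained to the previous key."""
    keys = []
    parent = ""
    for instruction in instructions:
        # COPY steps also depend on the files being copied; content_tags is a
        # hypothetical stand-in for a checksum of those files.
        content = content_tags.get(instruction, "")
        raw = f"{parent}|{instruction}|{content}".encode()
        key = hashlib.sha256(raw).hexdigest()[:12]
        keys.append(key)
        parent = key
    return keys


def reusable_layers(old_keys, new_keys):
    """Count the leading steps whose keys are unchanged (cache hits)."""
    hits = 0
    for old, new in zip(old_keys, new_keys):
        if old != new:
            break
        hits += 1
    return hits


steps = [
    "FROM node:20-alpine",
    "WORKDIR /app",
    "COPY package*.json ./",
    "RUN npm install",
    "COPY . .",
    'CMD ["npm", "start"]',
]

# Same package files, but the application source changed between builds.
before = layer_keys(steps, {"COPY package*.json ./": "pkg-v1", "COPY . .": "src-v1"})
after = layer_keys(steps, {"COPY package*.json ./": "pkg-v1", "COPY . .": "src-v2"})

print(reusable_layers(before, after))  # the first four steps are reused
```

Because each key is chained to its parent, a change in one step invalidates every step after it. That is why the recipe copies the package files and installs dependencies before copying the rest of the source.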

While "Docker" often refers to the entire platform, the actual execution of containers is handled by a lower-level component called containerd. Docker originally built containerd as part of Docker Engine, then split it out as an open-source project and donated it to the Cloud Native Computing Foundation (CNCF) in 2017. It is now an industry-standard runtime that works with Open Container Initiative (OCI) images and hands the actual process launch to an OCI runtime such as runc.

This modularity means Kubernetes (and other orchestrators) can also use containerd directly, decoupling the container runtime from the broader Docker platform.

Docker provides several built-in network drivers for containers to communicate, including bridge (the default for standalone containers), host, overlay, macvlan, and none.

Containers are ephemeral by nature – data inside them is lost when they are deleted. Docker Volumes provide a way to persist data, decoupling storage from the container's lifecycle. These can be managed volumes (managed by Docker) or bind mounts (directly mapping a host path).

Docker's intuitive CLI, integrated development experience (especially with Docker Desktop), and robust image management make it the undisputed champion for local development, rapid prototyping, and small-scale deployments. It ensures consistency from your laptop to a single server, making "but it works on my machine" a relic of the past.

While powerful for individual applications or smaller setups, managing a large number of Docker containers across multiple hosts manually becomes incredibly complex. Docker, by itself, doesn't offer inherent capabilities for automated scaling, self-healing, or advanced load balancing for complex, distributed systems. This is where the concept of orchestration enters the picture.

Kubernetes (K8s) emerged to address the challenges of managing containerized applications at scale. It's an open-source platform designed for container orchestration – automating the deployment, scaling, and management of containerized workloads, especially in distributed environments.

If Docker provides the building blocks (containers), Kubernetes provides the intelligent, automated factory floor to assemble, manage, and scale them.

Pods are the smallest deployable units in Kubernetes. A Pod encapsulates one or more containers, their storage resources, and a unique network IP. Containers in the same Pod share that IP address, along with IPC, hostname, and other resources. Common patterns include sidecar containers (e.g., a logging agent running alongside the main application container within the same Pod).

A Deployment is a higher-level abstraction that manages the deployment and scaling of Pods. You define the desired state (e.g., "I want 3 replicas of this application"), and the Deployment controller ensures that state is maintained, facilitating rolling updates and rollbacks.
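To make "desired state" concrete, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON. The `deployment_manifest` helper, the `web` name, and the `example.com/web:1.0` image are hypothetical; the field layout follows the `apps/v1` Deployment schema.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a minimal apps/v1 Deployment describing the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # The desired state: how many copies of the Pod should exist.
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest("web", "example.com/web:1.0", 3)
print(json.dumps(manifest, indent=2))
```

Saved to a file, the JSON output can be passed to kubectl apply -f, which accepts JSON as well as YAML. Changing `replicas` and re-applying is all it takes to ask the controller for a different number of Pods.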

Services provide a stable network endpoint for a set of Pods. Since Pods are ephemeral and can be created and destroyed frequently, a Service gives clients a consistent IP address and DNS name.

Ingress manages external access to Services within a cluster, typically over HTTP/S. It routes HTTP traffic based on host or path and can handle TLS termination before proxying to Services.

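ConfigMaps and Secrets decouple configuration data and sensitive information (passwords, API keys) from application code. They are injected into Pods as environment variables or mounted files, so configuration can change without rebuilding the image. Note that Secret values are only base64-encoded by default, so protecting them also depends on access controls and encryption at rest.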
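A short sketch may help show both sides of this: how values end up base64-encoded in a Secret's data field, and how an application reads injected settings from its environment. The `encode_secret_data` and `read_setting` helpers and the `API_KEY` and `LOG_LEVEL` names are hypothetical.

```python
import base64
import os


def encode_secret_data(values):
    """Base64-encode string values, as a Secret's data field expects."""
    return {
        key: base64.b64encode(value.encode()).decode()
        for key, value in values.items()
    }


def read_setting(name, default=None, environ=None):
    """Read a setting injected as an environment variable, with a fallback."""
    source = os.environ if environ is None else environ
    return source.get(name, default)


encoded = encode_secret_data({"API_KEY": "s3cr3t"})

# Base64 is an encoding, not encryption: anyone can reverse it.
decoded = base64.b64decode(encoded["API_KEY"]).decode()

# Inside the container, the application only sees plain environment variables.
fake_env = {"API_KEY": decoded}
print(read_setting("API_KEY", environ=fake_env))
print(read_setting("LOG_LEVEL", default="info", environ=fake_env))
```

Keeping the lookup behind a small function with a default makes the same image usable in environments where a given ConfigMap key is absent.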

Persistent Volumes (PVs), Persistent Volume Claims (PVCs), and StorageClasses address the challenge of stateful applications in an ephemeral container world.

PV: An abstract representation of a piece of storage in the cluster, provisioned by an administrator or dynamically. It could be an NFS share, iSCSI, or cloud-specific storage like AWS EBS.

PVC: A user's request for storage. A developer specifies how much storage is needed and which access mode (e.g., ReadWriteOnce, ReadOnlyMany), and Kubernetes attempts to bind the claim to a suitable PV.

StorageClass: Defines different "classes" of storage (e.g., fast SSDs, cheaper HDDs) and parameters for dynamic provisioning, abstracting the underlying storage details.
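The relationship between claims and volumes can be sketched as a matching problem. The following is a simplified illustration, not the real Kubernetes binder: the `Volume` and `Claim` types and the `bind` function are hypothetical, and choosing the smallest volume that fits is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Volume:
    name: str
    capacity_gi: int
    access_modes: frozenset
    storage_class: str


@dataclass(frozen=True)
class Claim:
    request_gi: int
    access_mode: str
    storage_class: str


def bind(claim, volumes):
    """Return the smallest volume that satisfies the claim, or None."""
    candidates = [
        v for v in volumes
        if v.storage_class == claim.storage_class
        and claim.access_mode in v.access_modes
        and v.capacity_gi >= claim.request_gi
    ]
    # Prefer the tightest fit so large volumes stay free for large claims.
    return min(candidates, key=lambda v: v.capacity_gi, default=None)


volumes = [
    Volume("pv-small", 5, frozenset({"ReadWriteOnce"}), "fast-ssd"),
    Volume("pv-medium", 20, frozenset({"ReadWriteOnce"}), "fast-ssd"),
    Volume("pv-shared", 100, frozenset({"ReadOnlyMany"}), "cheap-hdd"),
]

claim = Claim(request_gi=10, access_mode="ReadWriteOnce", storage_class="fast-ssd")
bound = bind(claim, volumes)
print(bound.name if bound else "pending")
```

When no existing volume matches, a real cluster with dynamic provisioning would ask the StorageClass to create one; this sketch simply returns `None`, which corresponds to a claim staying pending.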

Kubernetes offers powerful autoscaling at three levels:

Horizontal Pod Autoscaler (HPA): Scales the number of Pod replicas based on observed CPU utilization, memory usage, or custom metrics.

Vertical Pod Autoscaler (VPA): (A separate add-on from the Kubernetes autoscaler project, not part of the core distribution) Adjusts the CPU and memory requests/limits for individual Pods based on their historical usage, optimizing resource allocation without changing the number of Pods.

Cluster Autoscaler: Scales the number of nodes in your cluster up or down, based on pending Pods or underutilized nodes, typically interacting with your cloud provider's APIs.
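The core of the Horizontal Pod Autoscaler is a simple ratio: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below applies that formula; the `desired_replicas` function name and the min/max defaults are hypothetical, and the real autoscaler adds a tolerance band and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the HPA scaling ratio, then clamp to the configured bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 3 Pods averaging 90% CPU against a 60% target: 3 * 90 / 60 = 4.5, rounded up.
print(desired_replicas(3, 90, 60))

# 4 Pods averaging 30% CPU against a 60% target: scale in to 2.
print(desired_replicas(4, 30, 60))
```

Rounding up rather than to the nearest integer biases the result toward having slightly too much capacity instead of too little.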

Kubernetes uses a control plane and worker node architecture (historically described as master-worker), constantly working to maintain your desired state.

Control plane components make global decisions about the cluster (e.g., scheduling), detect and respond to cluster events, and store the cluster's state.

kube-apiserver is the front end of the Kubernetes control plane. It exposes the Kubernetes API, the main interface to the cluster's brain. All internal and external communication goes through the API server.

etcd is a consistent, highly available key-value store that serves as Kubernetes' backing store for all cluster data: configuration, state, and metadata. Crucially, if etcd goes down, your cluster essentially loses its memory, so regular backups matter.

kube-scheduler watches for newly created Pods that have no assigned node and selects a node for them to run on. It considers resource requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, and more.
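Scheduling can be thought of as two steps: filter out nodes that cannot run the Pod, then score the rest and pick the best. The sketch below shows that shape under heavy simplification. The `schedule` function, the dictionary fields, and the "most free capacity left" scoring rule are hypothetical choices for illustration; the real scheduler uses many more filters and scoring plugins.

```python
def schedule(pod, nodes):
    """Pick a node name for the pod, or None if no node can fit it."""

    def fits(node):
        # Filter step: the node must have enough free CPU and memory.
        return node["cpu_free"] >= pod["cpu"] and node["mem_free"] >= pod["mem"]

    def score(node):
        # Score step: prefer the node with the largest free fraction
        # remaining after the pod is placed.
        cpu_left = (node["cpu_free"] - pod["cpu"]) / node["cpu_total"]
        mem_left = (node["mem_free"] - pod["mem"]) / node["mem_total"]
        return (cpu_left + mem_left) / 2

    feasible = [node for node in nodes if fits(node)]
    if not feasible:
        return None
    return max(feasible, key=score)["name"]


# CPU in millicores, memory in MiB.
nodes = [
    {"name": "node-a", "cpu_free": 500, "cpu_total": 2000, "mem_free": 1024, "mem_total": 4096},
    {"name": "node-b", "cpu_free": 1500, "cpu_total": 2000, "mem_free": 3072, "mem_total": 4096},
    {"name": "node-c", "cpu_free": 300, "cpu_total": 2000, "mem_free": 4096, "mem_total": 4096},
]

print(schedule({"cpu": 400, "mem": 512}, nodes))
```

A Pod that no node can fit gets `None` here, which mirrors a Pod staying in the Pending state until capacity appears.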

kube-controller-manager runs various controllers that regulate the state of the cluster. Each controller is a control loop that constantly monitors the shared state of the cluster through the API server and makes changes to move the current state towards the desired state. Examples include the Node Controller, Replication Controller, Endpoints Controller, and Service Account Controller.
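The control-loop idea can be sketched in a few lines: compare the desired state with what is actually running and emit whatever actions close the gap. The `reconcile` function and the `web-N` Pod names below are hypothetical, and a real controller would watch the API server rather than take lists as arguments.

```python
import itertools

# Hypothetical source of unique Pod name suffixes for this sketch.
_pod_ids = itertools.count(1)


def reconcile(desired, running):
    """One pass of a control loop: return the new pod list and the actions taken."""
    running = list(running)
    actions = []
    # Too few Pods: create replacements until the count matches.
    while len(running) < desired:
        name = f"web-{next(_pod_ids)}"
        running.append(name)
        actions.append(("create", name))
    # Too many Pods: remove the surplus.
    while len(running) > desired:
        name = running.pop()
        actions.append(("delete", name))
    return running, actions


pods, actions = reconcile(3, [])
print(actions)

# Simulate a Pod crashing, then let the loop heal the difference.
pods.remove(pods[0])
pods, actions = reconcile(3, pods)
print(actions)

# Scale down by lowering the desired count.
pods, actions = reconcile(1, pods)
print(actions)
```

The loop never needs to know why the state drifted. Whether a node failed or someone edited the replica count, the same comparison produces the right corrective actions.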

cloud-controller-manager integrates with cloud providers to manage cloud resources (e.g., provisioning load balancers, managing cloud block storage, creating external DNS records).

Worker nodes are the machines where your actual containerized applications (within Pods) run.

kubelet is an agent that runs on each node in the cluster. It makes sure the containers described in each Pod assigned to its node are running and healthy. It communicates with the control plane, reports node status, and receives instructions for managing Pods and their containers.

kube-proxy is a network proxy that runs on each node and maintains network rules there. These rules allow network sessions inside or outside the cluster to reach your Pods. It can perform simple TCP/UDP stream forwarding or round-robin TCP/UDP forwarding across a set of backends.
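Round-robin forwarding across a set of backends can be sketched as a selector that cycles through Pod addresses. The `RoundRobinBackends` class and the IP addresses below are hypothetical. In practice kube-proxy usually programs kernel rules (for example iptables or IPVS) rather than forwarding traffic itself, and the exact balancing behavior depends on the mode in use.

```python
import itertools


class RoundRobinBackends:
    """Hand out backend addresses in a repeating order."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle = itertools.cycle(backends)

    def pick(self):
        """Return the backend that should receive the next connection."""
        return next(self._cycle)


service = RoundRobinBackends(["10.0.0.11:3000", "10.0.0.12:3000", "10.0.0.13:3000"])
picks = [service.pick() for _ in range(5)]
print(picks)
```

Clients only ever see the Service's stable address; which Pod answers a given connection is decided behind it.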

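The container runtime is the software responsible for running containers, such as containerd or CRI-O. Docker Engine can still be used, but since Kubernetes 1.24 removed the built-in dockershim it requires the cri-dockerd adapter, so it is uncommon in modern clusters. The runtime pulls images from registries, unpacks them, and runs the application process.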

Kubernetes shines in environments demanding high availability, automated resilience, and flexible scaling. It's the strategic choice for complex microservices architectures, managing distributed workloads across multi-cloud or hybrid environments, and enforcing strict governance and security policies at scale. Its declarative API model allows GitOps-driven deployments, promoting reliability and traceability.

Despite its immense power, Kubernetes has a steeper learning curve and higher initial complexity than simply using Docker. Setting up, configuring, and managing Kubernetes clusters can be resource-intensive and requires specialized skills. However, the benefits for large-scale, mission-critical applications often outweigh this initial investment.

As of mid-2025, the synergy between Docker and Kubernetes is stronger than ever, evolving with emerging trends:

Docker is enabling easier packaging of AI agents and local LLM inference setups, especially for building, experimenting, and deploying in local or isolated environments.

Meanwhile, Kubernetes is rapidly becoming the go-to platform for orchestrating complex AI/ML workflows end-to-end, from model training to inference. Its ability to manage GPU resources, scale dynamically, and integrate with data pipelines makes it ideal for these demanding workloads.

With increasing cyber threats, the security of container images and the supply chain is paramount. Innovations like Sigstore (for image signing and verification) and Software Bills of Materials (SBOMs) generated during Docker builds are becoming standard practice. Kubernetes integrates with admission controllers and policy engines (e.g., OPA Gatekeeper) to enforce security policies on deployments, ensuring only trusted images run.
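The kind of rule a policy engine enforces at admission time can be sketched as a plain function. This is a hypothetical policy for illustration only: the `admit` function, the `registry.example.com` registry, and the two checks (trusted registry, image pinned by digest) are assumptions, real Gatekeeper policies are written in Rego, and no signature verification happens here.

```python
def admit(image, trusted_registries):
    """Allow an image only if it comes from a trusted registry and is pinned by digest."""
    # Treat everything before the first slash as the registry host.
    registry = image.split("/", 1)[0]
    # A digest reference identifies exact content; a tag can be moved later.
    pinned = "@sha256:" in image
    return registry in trusted_registries and pinned


trusted = {"registry.example.com"}
digest = "a" * 64  # placeholder digest for the sketch

print(admit(f"registry.example.com/team/app@sha256:{digest}", trusted))
print(admit("registry.example.com/team/app:latest", trusted))
print(admit(f"docker.io/library/nginx@sha256:{digest}", trusted))
```

Requiring a digest means the content that was scanned and signed is exactly the content that runs, which is the property signing and SBOM tooling builds on.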

For developers seeking even more abstraction, serverless platforms built on Kubernetes are gaining traction. They allow you to deploy containerized applications without managing server infrastructure, automatically scaling containers down to zero when idle – a true "pay-per-use" model for containers.

While not replacing Docker, WebAssembly is emerging as a complementary technology for ultra-lightweight, high-performance sandboxing, particularly for edge computing and specialized workloads. Expect to see Kubernetes orchestrating environments where Wasm modules run alongside traditional containers.

The complexity of self-managing Kubernetes clusters means that managed services will continue to dominate. These services abstract away the control plane management, allowing organizations to focus on their applications rather than the underlying infrastructure.


So, Docker versus Kubernetes? That’s probably not the right question. The better question would be: What can I do with one without the other? And when do I need both working together?

Docker provides standardized, portable, and efficient application packaging. Kubernetes provides the robust, automated, and scalable infrastructure to run and manage those packages across any environment.

For your single-developer project or a simple web app, Docker might be all you need to get started. But when your ambition grows, when your application needs to handle millions of users, survive node failures, or integrate seamlessly into a complex CI/CD pipeline, Kubernetes steps in as the essential orchestration layer. That said, you’ll still need Docker, or another OCI-compatible build tool, to create the container images Kubernetes runs.

Embrace the synergy. Master Docker for creating and perfecting your encapsulated applications, and then leverage Kubernetes to orchestrate them into a resilient, scalable, and highly available powerhouse. Yes, newer technologies are emerging, but Docker and Kubernetes remain foundational, indispensable. If you want to be part of the next wave of cloud-native innovation, these are the skills you absolutely need to perfect.