

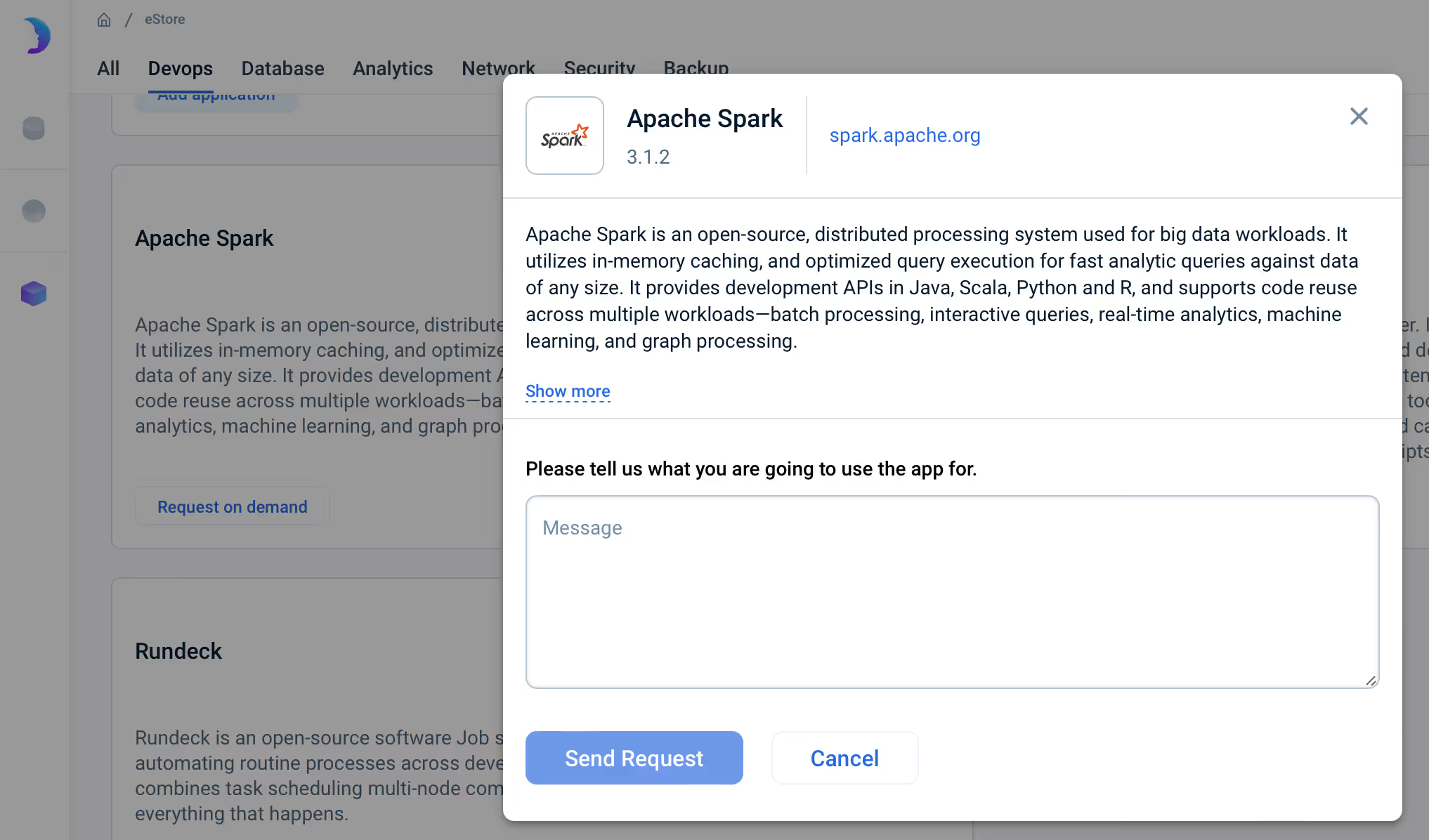

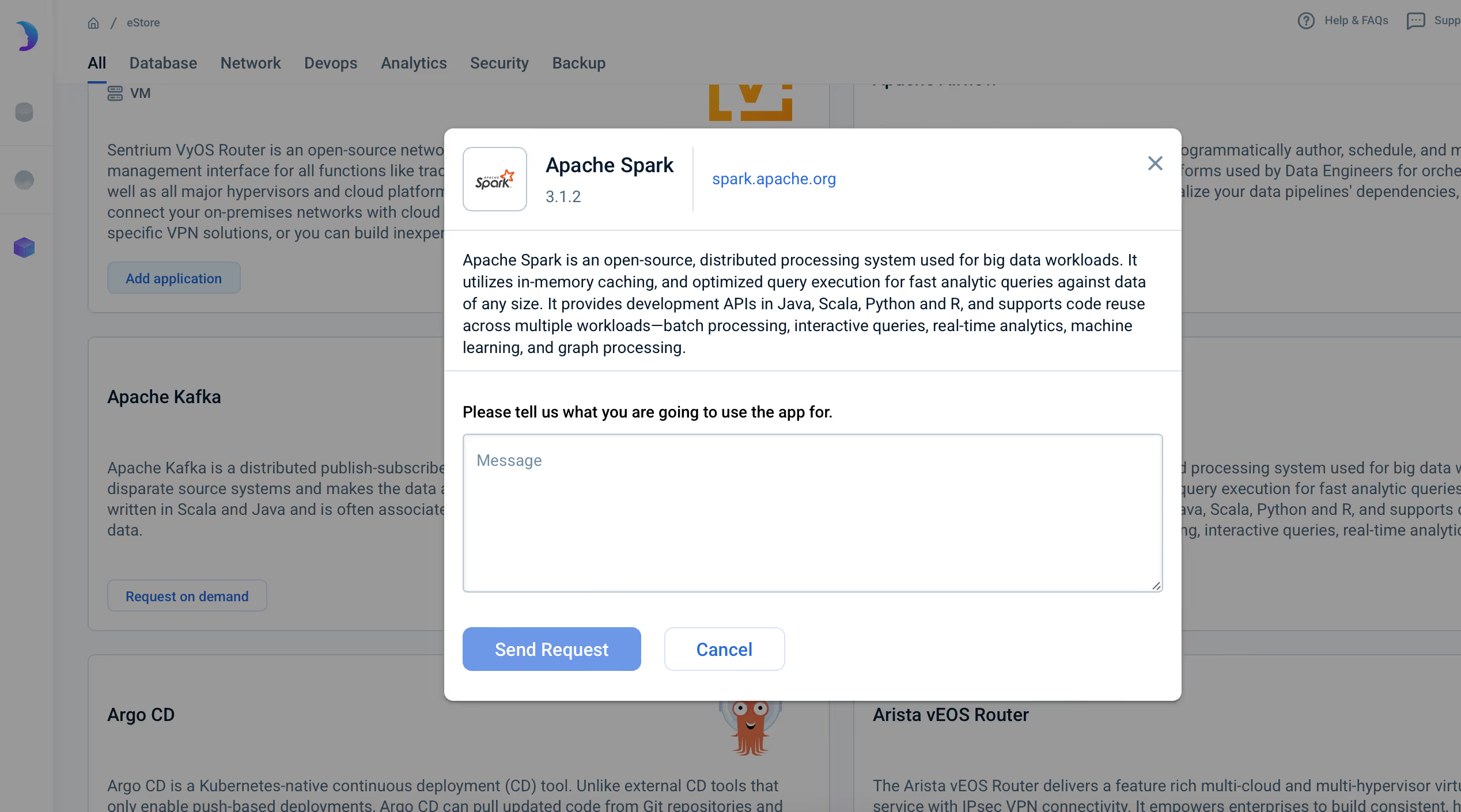

Apache Spark is an open-source, distributed processing system used for big data workloads. It uses in-memory caching and optimized query execution to run fast analytic queries against data of any size. It provides development APIs in Java, Scala, Python, and R, and supports code reuse across multiple workloads: batch processing, interactive queries, real-time analytics, machine learning, and graph processing.

With Apache Spark, you can:

Apache Spark can be deployed in various environments, including cloud platforms, Kubernetes, and local or on-premises infrastructure. Cloud providers such as AWS, Azure, and GCP all offer native managed Spark services.

By integrating Spark with emma, teams can:

Integrate Apache Spark with emma to ensure efficient big data processing, cost-effective infrastructure use, and better visibility across distributed cloud environments.